Update hyper parameter example and add documentation

Showing

- docs/hyperparameters.txt 59 additions, 0 deletionsdocs/hyperparameters.txt

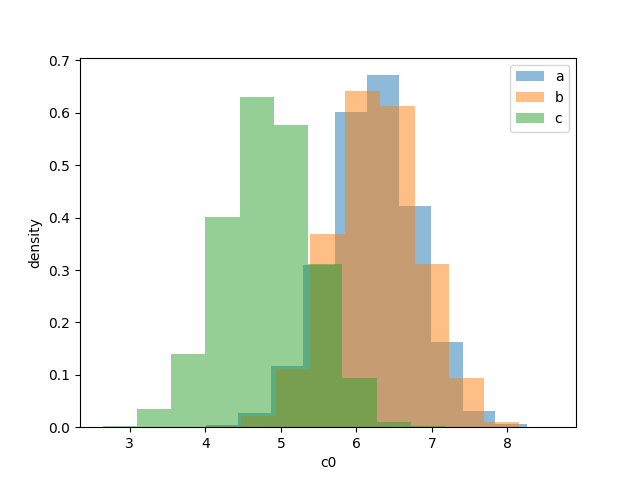

- docs/images/hyper_parameter_combined_posteriors.png 0 additions, 0 deletionsdocs/images/hyper_parameter_combined_posteriors.png

- docs/images/hyper_parameter_corner.png 0 additions, 0 deletionsdocs/images/hyper_parameter_corner.png

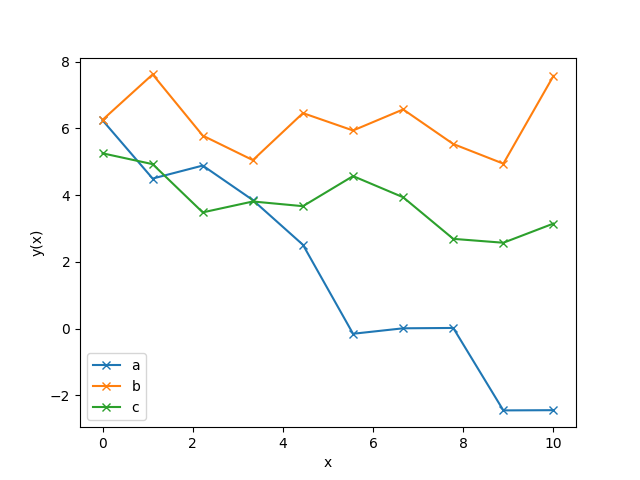

- docs/images/hyper_parameter_data.png 0 additions, 0 deletionsdocs/images/hyper_parameter_data.png

- docs/index.txt 1 addition, 0 deletionsdocs/index.txt

- examples/other_examples/hyper_parameter_example.py 81 additions, 41 deletionsexamples/other_examples/hyper_parameter_example.py

docs/hyperparameters.txt

0 → 100644

11.6 KiB

docs/images/hyper_parameter_corner.png

0 → 100644

121 KiB

docs/images/hyper_parameter_data.png

0 → 100644

30.1 KiB